Note: Read the first part, “Structuring Talent data with Neo4j and Gemini”, before this one.

When Google announced the GenAI Toolbox, I immediately knew it was an important step. Finally, a structured, open-source framework that allows AI agents to work with databases like PostgreSQL and Neo4j using function calling. No more hacking together messy SQL generation inside prompts.

But as I dug into the available examples, something stood out. They were simple—too simple for anything serious. One query, one response, no memory, no critical thinking—just a basic function call per user input.

This got me thinking:

What would it take to build a real agent?

One that could handle multi-step conversations, reason about the data in a graph, make smart decisions, and recover from errors?

In this post, I’ll show you how I took the GenAI Toolbox approach and extended it — building a production-grade Neo4j agent designed for real-world use.

The Starting Point: Google’s Example

First, credit where it’s due: the GenAI Toolbox project is a major enabler. In particular, the “install locally” example found at the MCP Toolbox for Databases walks you through getting up and running with a SQL database and basic queries.

The Neo4j example on the Neo4j Blog shows how easy it is to define a graph source, write a few Cypher queries as tools, and build a basic agent.

By following and combining these two guides, you end up with an agent that can answer simple questions like “list all hotels”. That, however, is where the examples stop.

The limitations at this point are obvious: no multi-turn conversations, no context memory, no advanced synthesis of results, no real planning across steps, and no error handling.

Good for a hello-world demo, and not much more.

What I Wanted to Build Instead

My goals were simple. All I needed was:

- Multi-turn dialogue, with memory

- Dynamic reasoning across graph relationships (Skills, Companies, Projects, Clients in our HR example)

- Safe execution with retries and error handling

- Fully asynchronous for real workloads

- Scalable to more tools and deeper queries
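The “safe execution” and “fully asynchronous” goals largely come down to how each tool call is wrapped. Here is a minimal, stdlib-only sketch, assuming every tool is an async callable. The helper name `call_with_retries`, the retry and timeout defaults, and the choice of which errors count as transient are all my illustrative assumptions. They are not part of the Toolbox API.

```python
import asyncio
import random
from typing import Any, Awaitable, Callable, Optional


async def call_with_retries(
    tool: Callable[..., Awaitable[Any]],
    *args: Any,
    attempts: int = 3,
    timeout_s: float = 10.0,
    base_delay_s: float = 0.5,
    **kwargs: Any,
) -> Any:
    """Await tool(*args, **kwargs) with a per-attempt timeout and exponential backoff.

    Transient failures (timeouts, connection errors) are retried; any other error
    is reported immediately. Failures are returned as {"error": ...}, not raised.
    """
    last_error: Optional[BaseException] = None
    for attempt in range(attempts):
        try:
            # Bound each attempt so one slow query cannot stall the whole agent loop.
            return await asyncio.wait_for(tool(*args, **kwargs), timeout=timeout_s)
        except (asyncio.TimeoutError, ConnectionError) as exc:
            last_error = exc
            if attempt < attempts - 1:
                # Back off base, 2*base, 4*base, ... plus a little jitter.
                await asyncio.sleep(base_delay_s * 2 ** attempt + random.uniform(0, 0.05))
        except Exception as exc:  # e.g. a Cypher syntax error: retrying will not help
            return {"error": f"{type(exc).__name__}: {exc}"}
    return {"error": f"gave up after {attempts} attempts: {last_error!r}"}
```

Returning an `{"error": ...}` dict instead of raising is a deliberate design choice. The failure reaches the model as an ordinary tool result, so the model can try a different query instead of crashing the conversation.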

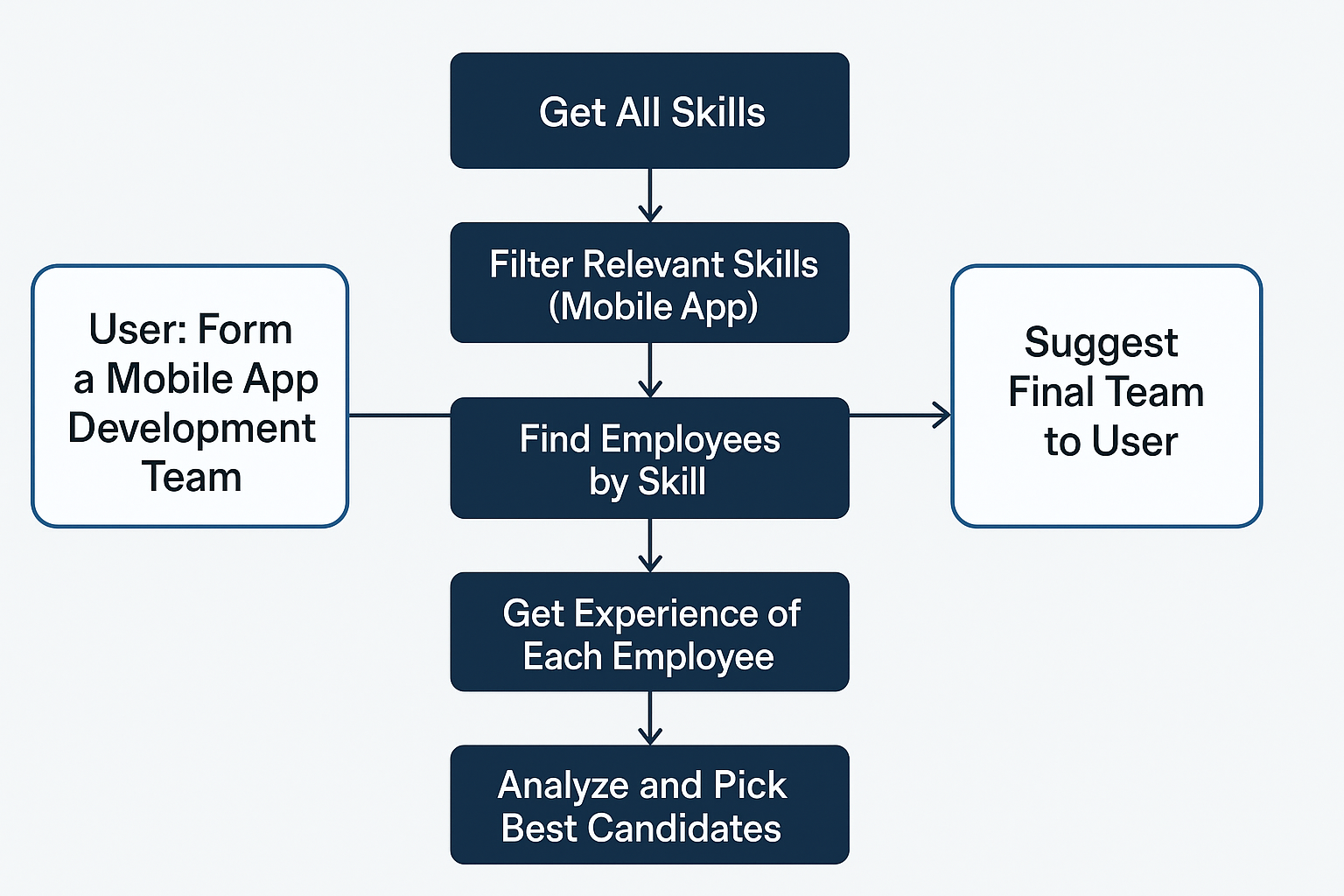

To illustrate how the agent should operate, here is the reasoning process I was aiming for:

- User asks: “Form a team for a Mobile App Development project”
- Agent Phase 1: Retrieve Skills
  - Query: get_all_skills
  - Purpose: Get all available skills from the company’s graph database (Neo4j).
- Agent Phase 2: Analyze & Filter Relevant Skills
  - Internally analyze the full skill list (e.g., match skills like React, React Native, Flutter, Kotlin, Swift).
  - Select only skills relevant for Mobile App Development.
- Agent Phase 3: Find Employees for Each Skill
  - For each selected relevant skill:
    - Query: get_persons_by_skill(skill_name)
    - Collect potential candidates for each key skill.
- Agent Phase 4: Assess Candidates
  - Use experience/history data:
    - Query: get_experience_for_person(person_name)
    - Possibly: get_all_position_details_for_person(person_name)
    - Analyze seniority, past companies, project relevance.
- Agent Phase 5: Form the Team
  - Select best-fit candidates covering all required skills.
  - Try to minimize overlaps and maximize complementary skills.
- Respond to the user: Suggest the final team and explain why those candidates were selected.
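In my agent, Phases 4 and 5 are left to the LLM's judgment. For a deterministic point of comparison, note that “cover all required skills while minimizing overlap” is essentially the classic set-cover problem, and a greedy heuristic usually produces a reasonable team. The sketch below is hypothetical and is not code from the gist. `pick_team` and the sample data are made up for illustration.

```python
from typing import Dict, List, Set, Tuple


def pick_team(required: Set[str], candidates: Dict[str, Set[str]]) -> Tuple[List[str], Set[str]]:
    """Greedy set cover: repeatedly add the person who covers the most still-missing skills.

    Returns the team and any required skills that nobody could cover.
    """
    uncovered = set(required)
    team: List[str] = []
    while uncovered:
        # Rank remaining people by the new skills they add; break ties alphabetically.
        best = min(
            (p for p in candidates if p not in team),
            key=lambda p: (-len(candidates[p] & uncovered), p),
            default=None,
        )
        if best is None or not (candidates[best] & uncovered):
            break  # nobody left can close the remaining gaps
        team.append(best)
        uncovered -= candidates[best]
    return team, uncovered


# Hypothetical output of Phases 2-3: relevant skills and who has them.
candidates = {
    "Alice": {"React Native", "React"},
    "Bob": {"Kotlin", "Swift"},
    "Carol": {"Kotlin"},
    "Dan": {"Flutter", "Kotlin"},
}
team, gaps = pick_team({"React Native", "Kotlin", "Swift", "Flutter"}, candidates)
print(team, gaps)  # ['Bob', 'Alice', 'Dan'] set()
```

Carol is left out because Bob and Dan already cover Kotlin, which is the “minimize overlaps” rule in action. Greedy set cover is an approximation and is not guaranteed to find the smallest possible team. It also ignores seniority and project history, which is exactly where letting the LLM weigh the Phase 4 data adds value.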

Engineering the Knowledge Graph with YAML

Instead of two or three basic queries, I designed a full set of tools over a Personnel Management Knowledge Graph.

You can find the full YAML here: Public Gist – Neo4j Personnel Tools

But here is a taste of it:

```yaml
sources:
  my-neo4j-personnel-db:
    kind: "neo4j"
    uri: "bolt://127.0.0.1:7687"
    user: "neo4j"
    password: ${PASSWORD}
    database: "neo4j"

tools:
  get_persons_by_multiple_skills:
    kind: neo4j-cypher
    source: my-neo4j-personnel-db
    statement: |
      MATCH (p:Person)
      WHERE size($skill_list) > 0
        AND ALL(skillName IN $skill_list WHERE EXISTS((p)-[:HAS_SKILL]->(:Skill {name: skillName})))
      RETURN p.name AS personName
    parameters:
      - name: skill_list
        type: array
```

I didn’t stop at reading data either.

The agent can now:

- Link a skill to a person

- Add new skills

- Update a person’s contact information

- Retrieve full job histories, including responsibilities

- List past companies and active clients

In short — it thinks in graph structures, not tables.
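The write tools follow the same YAML pattern as the read tools. To make this concrete, here is a Python sketch of a hypothetical `link_skill_to_person` tool, written as the dict that `yaml.safe_load` would return. It also includes a hypothetical helper that turns such a definition into a JSON-Schema-style function declaration, the kind of description an LLM sees during function calling. The tool name, the type mapping, and the rule that every parameter is required are my assumptions. In practice you would probably rely on the Toolbox client SDK rather than hand-rolling this conversion.

```python
from typing import Any, Dict

# Toolbox parameter type -> JSON Schema type (assumed mapping, for illustration only).
TYPE_MAP = {"string": "string", "integer": "integer", "float": "number",
            "boolean": "boolean", "array": "array"}


def param_schema(param: Dict[str, Any]) -> Dict[str, Any]:
    """Convert one Toolbox-style parameter definition to a JSON Schema fragment."""
    schema: Dict[str, Any] = {"type": TYPE_MAP[param["type"]],
                              "description": param.get("description", "")}
    if param["type"] == "array":
        schema["items"] = param_schema(param["items"])  # arrays describe their element type
    return schema


def to_function_declaration(name: str, tool: Dict[str, Any]) -> Dict[str, Any]:
    """Build a function declaration; every parameter is treated as required (assumption)."""
    params = tool.get("parameters", [])
    return {
        "name": name,
        "description": tool.get("description", ""),
        "parameters": {
            "type": "object",
            "properties": {p["name"]: param_schema(p) for p in params},
            "required": [p["name"] for p in params],
        },
    }


# Hypothetical write tool. MERGE keeps it idempotent, so a retried call does not
# create duplicate Skill nodes or duplicate HAS_SKILL relationships.
link_skill_tool = {
    "kind": "neo4j-cypher",
    "source": "my-neo4j-personnel-db",
    "description": "Link an existing person to a skill, creating the skill if needed.",
    "statement": (
        "MATCH (p:Person {name: $person_name}) "
        "MERGE (s:Skill {name: $skill_name}) "
        "MERGE (p)-[:HAS_SKILL]->(s)"
    ),
    "parameters": [
        {"name": "person_name", "type": "string", "description": "Full name of the person."},
        {"name": "skill_name", "type": "string", "description": "Name of the skill."},
    ],
}

decl = to_function_declaration("link_skill_to_person", link_skill_tool)
```

Choosing `MERGE` over `CREATE` matters more for an agent than for a human user. The model may repeat a call, or a retry wrapper may re-send it, and the graph should end up in the same state either way.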

Designing a True Multi-Turn Agent

Building the tool definitions was one part; building the actual agent framework was another.

You can find the full code here: Public Gist – Neo4j Agent Framework

The design focuses on Agentic Thinking:

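```python
from google.genai.types import (
    Content, FunctionCallingConfig, FunctionCallingConfigMode,
    GenerateContentConfig, Part, ToolConfig,
)

# Main agent loop (runs inside an async function; helpers come from the full gist)
history.append(Content(role="user", parts=[Part.from_text(text=user_query)]))  # add the user turn once

config = GenerateContentConfig(
    system_instruction=system_instruction,  # send on every call; it is not stored in `history`
    tools=genai_tools,
    tool_config=ToolConfig(
        function_calling_config=FunctionCallingConfig(mode=FunctionCallingConfigMode.AUTO),
    ),
)

for iteration in range(MAX_AGENT_ITERATIONS):
    response = await genai_client.aio.models.generate_content(
        model="gemini-2.0-pro",
        contents=history,
        config=config,
    )
    history.append(response.candidates[0].content)  # keep the model's turn, including its function calls

    if not response.function_calls:
        break  # no further tool calls: the model has produced its final answer

    # Function call detection and execution
    for function_call in response.function_calls:
        result = await call_tool(function_call, toolbox_tools)
        history.append(result_as_tool_content(result, function_call.name))

# Final response
print(response.text)
```

This loop is perhaps the most important part of the agent. It is not just a coding trick; it is central to how the agent reasons. Each iteration allows the agent to: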

- Absorb new information (user query, function results)

- Adjust its thinking based on previous responses and tool outputs

- Plan next steps dynamically (e.g., if a skill search returns no people, try fallback strategies)

- Chain multiple tool calls across different logical phases (skills ➔ people ➔ experience ➔ selection)

Instead of treating the conversation as a one-shot request-response, the agent uses multiple reasoning cycles to solve more complex, multi-step tasks, like forming a Mobile App Development team across several database queries.
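To show the loop's control flow without a database or an API key, here is a stdlib-only sketch. A scripted fake model stands in for Gemini, and two async stubs stand in for the Toolbox tools. Everything in it (`ModelTurn`, `run_agent`, the stub data) is hypothetical. The point is the shape of the loop: add the user turn once, let the model call tools, feed the results back, and stop when the model answers without calling a tool.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Any, Awaitable, Callable, Dict, List, Optional, Tuple


@dataclass
class ModelTurn:
    """Stand-in for one model response: tool calls to run, or a final text answer."""
    function_calls: List[Dict[str, Any]] = field(default_factory=list)
    text: Optional[str] = None


Model = Callable[[List[Dict[str, Any]]], Awaitable[ModelTurn]]
Tool = Callable[..., Awaitable[Any]]


async def run_agent(model: Model, tools: Dict[str, Tool], user_query: str,
                    max_iterations: int = 8) -> Tuple[str, List[Dict[str, Any]]]:
    history: List[Dict[str, Any]] = [{"role": "user", "content": user_query}]  # added once
    for _ in range(max_iterations):
        turn = await model(history)  # the model sees everything gathered so far
        if not turn.function_calls:
            return turn.text or "", history  # no tool calls left: final answer
        for call in turn.function_calls:
            history.append({"role": "model", "call": call})
            try:
                result = await tools[call["name"]](**call["args"])
            except Exception as exc:  # surface failures to the model so it can adapt
                result = {"error": f"{type(exc).__name__}: {exc}"}
            history.append({"role": "tool", "name": call["name"], "content": result})
    return "Stopped: iteration limit reached.", history


# --- Hypothetical stand-ins for the Toolbox tools and for Gemini ---
async def get_all_skills() -> List[str]:
    return ["Kotlin", "Swift", "Excel"]


async def get_persons_by_skill(skill_name: str) -> List[str]:
    return {"Kotlin": ["Bob"], "Swift": ["Bob", "Eve"]}.get(skill_name, [])


script = iter([
    ModelTurn(function_calls=[{"name": "get_all_skills", "args": {}}]),
    ModelTurn(function_calls=[{"name": "get_persons_by_skill", "args": {"skill_name": s}}
                              for s in ("Kotlin", "Swift")]),
    ModelTurn(text="Suggested team: Bob (Kotlin, Swift)."),
])


async def scripted_model(history: List[Dict[str, Any]]) -> ModelTurn:
    return next(script)  # a real model would decide based on `history`


answer, history = asyncio.run(run_agent(
    scripted_model,
    {"get_all_skills": get_all_skills, "get_persons_by_skill": get_persons_by_skill},
    "Form a team for a Mobile App Development project",
))
```

The three scripted turns mirror the skills ➔ people ➔ answer chain. A real model would derive the second turn's skill list from the first tool result instead of reading it from a script.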

The Role of the Prompt: Forcing the LLM to Make Decisions

In agent systems like the one I built, the system prompt is absolutely critical. It’s not just about “being helpful” — it’s about forcing the model to take ownership of decisions. In my case, the prompt explicitly instructs the agent to:

- Synthesize multiple pieces of information (skills, experience)

- Analyze relevance (is a skill truly useful for the goal?)

- Prioritize (select candidates based on past work, not randomly)

- Make final selections without asking for endless clarifications

Why this matters:

By forcing the LLM to decide — not just suggest — we eliminate weak behaviors like:

- Returning vague answers

- Asking the user for every small confirmation

- Stopping after the first incomplete tool call

Instead, the model is empowered to reason, to plan tool calls intelligently, and to commit to actions that move the conversation forward productively. In effect, the prompt elevates the LLM from a passive responder to an active, autonomous agent.

Instructions:

- Use the available tools (functions) based on the user's request to query or modify the Neo4j database.

- You may need to chain multiple tool calls to answer complex questions. First, figure out what information you need, call the necessary tools one by one, analyze the results, and then formulate your final answer.

......

- Be concise and factual based on the information retrieved from the database.

- If you cannot find the requested information using the tools, clearly state that.

- Do not guess information or make assumptions beyond what the tools provide.

- When you think you have all the information you need, do not call a function; just give the answer to the user.

......

- Do not prompt the user for information if you can call a function to get it yourself. Make assumptions beyond what the tools provide.

......

“Without a strong prompt, the LLM only answers.

With the right prompt, the LLM decides, reasons, and acts — like a true agent.”

Final Thoughts

Google’s GenAI Toolbox opened the door. But what you do after opening the door is up to you. I wanted to show what’s possible if you treat agents seriously — not just as query engines, but as dynamic, reasoning entities over knowledge graphs.

If you’re exploring AI agents, Neo4j, or LLM integration into your apps, feel free to reach out. I’d love to hear what you’re working on.

🔗 YAML + Agent Code: Public Gists Here

🔗 GenAI Toolbox for Databases

🔗 GenAI Toolbox GitHub

Until next time,

– Tsartsaris Sotirios